Copying files from multiple SharePoint sites onto Azure Data Lake (Gen 2) using Azure Logic Apps and Azure Data Factory

An interesting area I was reading up on

recently was to Ingest data from a SharePoint folder to Azure Data Lake (Gen

2). There were quite a few interesting articles on this topic, some of which

are listed below,

Source: MSSQL tips

Source: The Tech Platform

Like these articles suggests, I too looked

at the approach of using Logic Apps to do the same. However, I had multiple SharePoint

sites and folders to ingest data from. The process was too tedious to be done

manually and hence a solution through which this could be semi-automated was required.

The solution is broken into 2 parts,

- Azure Logic App – Holds the steps to read files on a particular SharePoint folder and writes it into Azure Data Lake Storage (Gen 2).

- Azure Data Factory – Automates the process by passing parameters to the Logic App (Sites and Paths) and assists in managing trigger times.

- Azure Subscription.

- A service account created on the Azure Active Directory.

- Access to the required SharePoint folders through the above service account.

- A source with information regarding sites (SQL, Excel file on Azure Data Lake. Etc.)

Creating the logic app

- Follow instruction mentioned on the site below to create a logic app resource. https://docs.microsoft.com/en-us/azure/logic-apps/quickstart-create-first-logic-app-workflow#create-a-logic-app-resource

- Open a blank logic app on the logic app designer and add a “When a HTTP request is received” trigger. This is to be able to run it on demand.

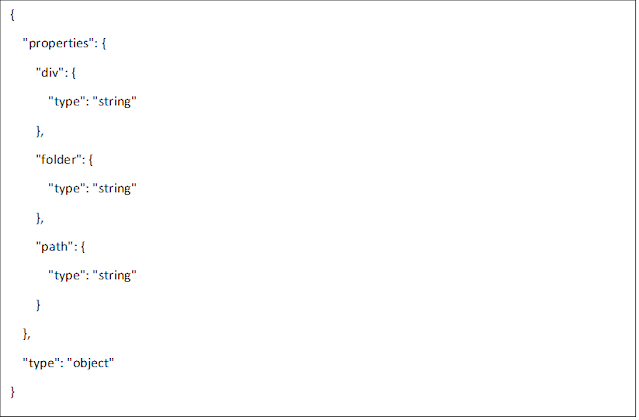

- Add a request body (JSON object) with the required number of properties. (Remember these properties will be used to send data from Azure Data Factory to the Logic App). For this purpose, I’ve initialized 3, namely div, folder and path.

The request body should like this.

- Add a SharePoint “List Folder” action > Fill Site Address and File identifier with the appropriate dynamic content (The properties initialized on the body of the trigger above).

Tip: remember to authenticate this action using the service account with permission

Tip: Encode the folder path (activity uses double encoding)

Mine looked like this,

encodeUriComponent(triggerBody()?['path'])

|

Expression |

Definition |

|

encodeUriComponent(triggerBody()?['path']) |

Encoded folder path. Path is an output property from the trigger. |

- Add in a For each operator > Select the Body of the previous step through dynamic content for Select an option from previous steps.

- Within the For each loop add a SharePoint "Get file content" action > For Site address add the “folder” property off the trigger body > For File Identifier add “Path” from the output properties off the SharePoint List Folder action. Tip: remember to authenticate this action using the service account with permission

- Add a Create blob (V2) action within the for each loop > Create connection to the destination Storage Account

- On folder path add a folder path of choice. I’ve used a dynamic path like below, Tip: remember to include the name of the blob in the path

Blob Name/Parent Folder/Category

passed through the trigger/Year/Month/Date/Folder with file name/File with

datetime prefix

Mine looked something

like this,

concat(playarea/SharePointFiles/',triggerBody()?['div'],'/',formatDateTime(utcNow(),'yyyy/MM/dd'),'/',substring(items('For_each')?['Name'],0,indexOf(items('For_each')?['Name'],'.')))

|

Expression |

Definition |

|

triggerBody()?['div'], |

Category passed from ADF through http trigger |

|

items('For_each')?['Name'] |

Iteration of value within Name output property |

- On Blob name add a file name of choice. I’ve used a dynamic naming to accommodate multiple files if run multiple times a day like below,

Current UTC date

time_ Iteration of value within Name output property

Mine looked something

like this,

concat(utcNow(),'_',items('For_each')?['Name'])

|

Expression |

Definition |

|

items('For_each')?['Name'] |

Iteration of value within Name output property |

Azure Data Factory (ADF) Pipeline

- On a new pipeline add a Look up activity > Add dataset containing site information (Setup linked service if necessary).

I’ve used an excel file saved on Azure Data Lake (Gen 2) that includes,

Site : SharePoint site in text for (include site within double quotes =”site”)

Category : Category Name

Path : Folder Path (Folder Path starting with a /)

- Add a for each loop > Add output of the lookup activity as Items.

- Within the activity add a web activity > Add URL (Found on the When a HTTP request is received trigger after saving) > Add Body (JSON structure including values to properties initialized on the Logic App).

|

Expression |

Definition |

|

@{item().Site} |

Site column value passed iteratively from the excel containing

site information. |

|

@{item().Path} |

Path column value passed iteratively from the excel containing

site information. |

|

@{item().Category} |

Category column value passed iteratively from the excel containing

site information. |

- Add a trigger and publish or run manually as desired. The output folder will look like this.

Note: I’ve

not synchronously called the logic app using ADF. To get an idea on how to do so, check out

this article by MSSQL tips.

Hope this comes in handy!

Cheers!

Thanks for this blog, keep sharing your thoughts like this...

ReplyDeleteWhat is Microsoft Azure

Microsoft Azure Benefits

Thanks! Appreciate it.

DeleteThanks for this blog keep sharing your thoughts like this...

ReplyDeleteHTML5 Training in Chennai

HTML5 Course in Chennai